Enabling industrial hyperspectral imaging

The Perception Core is a data processing core that supports a comprehensive set of methodologies to enable industrial hyperspectral imaging.

Hyperspectral imaging raises a number of concerns that have to be taken into account. The Perception Core provides data processing methods that address the needs arising along the entire chain of a hyperspectral application.

For industrial inline applications, the Perception Core can run as a stand-alone system once it has been configured with the Perception Studio software. Less time-critical data processing tasks can be handled by the off-line core, e.g. accessed through a Perception Studio plug-in.

The Perception Core can output spectroscopic data, feature data (values describing a property) and/or decision data (such as class information) per object pixel.

Functionality

Sensor Data Preprocessing

- Sensor Noise Suppression (Filtering by windowing (FIR))

- Dark Current Suppression

- Optical distortion correction (Smile & Keystone) *)

- Wavelength Calibration

- Illumination non-uniformity correction (e.g. balancing to white target)

- Defect pixel interpolation

- Pixel behavior unifying *)

- Region of interest
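Two of the steps listed above, dark current suppression and balancing to a white target, are commonly combined into one reflectance calibration. The following is a minimal NumPy sketch of that idea, not the Perception Core implementation; the function name `calibrate_reflectance`, the array layout (lines, pixels, bands) and the `eps` guard are assumptions made for illustration.

```python
import numpy as np

def calibrate_reflectance(raw, dark, white, eps=1e-6):
    """Convert raw sensor counts to relative reflectance.

    raw   : (lines, pixels, bands) raw counts of the scanned object
    dark  : (pixels, bands) mean frame recorded without light
    white : (pixels, bands) mean frame recorded on the white target
    """
    raw = np.asarray(raw, dtype=np.float64)
    dark = np.asarray(dark, dtype=np.float64)
    white = np.asarray(white, dtype=np.float64)
    # Guard against division by zero where white and dark coincide.
    span = np.maximum(white - dark, eps)
    # Broadcasting applies the per-pixel, per-band references to every line.
    return (raw - dark) / span

# Tiny synthetic example: 2 lines, 3 spatial pixels, 4 bands.
dark = np.full((3, 4), 100.0)
white = np.full((3, 4), 1100.0)
raw = np.full((2, 3, 4), 600.0)
reflectance = calibrate_reflectance(raw, dark, white)
```

In this synthetic case every pixel sits halfway between the dark and white references, so the resulting relative reflectance is 0.5 everywhere.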

Hyperspectral Preprocessing

- Filtering by windowing (FIR), e.g. Savitzky-Golay

- Derivative (e.g. Savitzky-Golay)

- Normalization (Min/Max, absolute normalization)
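To make the pre-processing steps above more concrete, here is a small NumPy-only sketch of a Savitzky-Golay FIR filter (smoothing and derivative) and a Min/Max normalization. The helper names `savgol_coeffs`, `savgol_apply` and `minmax_normalize` are hypothetical, and the sketch simply drops the edge samples instead of padding them; the Perception Core may well handle these details differently.

```python
import numpy as np
from math import factorial

def savgol_coeffs(window, polyorder, deriv=0, delta=1.0):
    """FIR coefficients of a Savitzky-Golay filter (least-squares polynomial fit)."""
    if window % 2 != 1 or polyorder >= window or deriv > polyorder:
        raise ValueError("need odd window > polyorder >= deriv")
    half = window // 2
    x = np.arange(-half, half + 1, dtype=np.float64)
    A = np.vander(x, polyorder + 1, increasing=True)  # columns x^0, x^1, ...
    # Row `deriv` of the pseudo-inverse yields polynomial coefficient a_deriv;
    # the deriv-th derivative at the window centre is deriv! * a_deriv.
    return factorial(deriv) * np.linalg.pinv(A)[deriv] / delta**deriv

def savgol_apply(spectra, window, polyorder, deriv=0, delta=1.0):
    """Filter along the last (spectral) axis; edge samples are dropped."""
    c = savgol_coeffs(window, polyorder, deriv, delta)
    # np.correlate slides the kernel over the spectrum without flipping it.
    return np.apply_along_axis(
        lambda s: np.correlate(s, c, mode="valid"), -1, spectra)

def minmax_normalize(spectra, eps=1e-12):
    """Scale each spectrum to the range 0..1 along the last axis."""
    lo = spectra.min(axis=-1, keepdims=True)
    hi = spectra.max(axis=-1, keepdims=True)
    return (spectra - lo) / np.maximum(hi - lo, eps)

# Synthetic spectrum: 41 bands, 20 nm apart, linear in wavelength.
wavelengths = np.linspace(900.0, 1700.0, 41)
spectrum = 0.001 * wavelengths + 0.2
first_derivative = savgol_apply(spectrum, 7, 2, deriv=1, delta=20.0)
normalized = minmax_normalize(spectrum[np.newaxis, :])
```

For the linear test spectrum the first derivative recovers the slope of 0.001 per nm at every interior band, which is a quick sanity check for the coefficients.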

Feature Extraction

- Physical Features (e.g. degree of reflectance)

- Statistical Features (e.g. minimum or maximum of spectra)

- Chemometric Features (e.g. linear transformation)

- Spectral Index Features (e.g. normalized difference vegetation index)
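As an illustration of a spectral index feature, the normalized difference vegetation index is defined as (NIR - Red) / (NIR + Red). The sketch below computes it per pixel from a reflectance cube; the band centres of 670 nm and 800 nm, the nearest-band lookup and the function names are assumptions chosen for the example, not values taken from the Perception Core.

```python
import numpy as np

def band_index(wavelengths_nm, target_nm):
    """Index of the band whose centre wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(np.asarray(wavelengths_nm) - target_nm)))

def ndvi(cube, wavelengths_nm, red_nm=670.0, nir_nm=800.0, eps=1e-12):
    """NDVI per pixel for a (lines, pixels, bands) reflectance cube."""
    cube = np.asarray(cube, dtype=np.float64)
    red = cube[..., band_index(wavelengths_nm, red_nm)]
    nir = cube[..., band_index(wavelengths_nm, nir_nm)]
    # eps avoids a division by zero for completely dark pixels.
    return (nir - red) / np.maximum(nir + red, eps)

# Synthetic cube: 1 line, 2 pixels, 4 bands.
wavelengths = [450.0, 550.0, 670.0, 800.0]
cube = np.array([[[0.05, 0.10, 0.10, 0.50],    # vegetation-like pixel
                  [0.30, 0.30, 0.30, 0.30]]])  # spectrally flat pixel
index_image = ndvi(cube, wavelengths)
```

The result has one value per object pixel: the vegetation-like pixel yields 0.4 / 0.6, the flat pixel yields 0.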

Feature Operation

- algebraic operation (e.g. addition or multiplication)

- logical operation (e.g. AND or OR)

- comparison operation (e.g. less-than, equal-to)
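The three kinds of feature operation can be sketched on plain NumPy arrays, where each array is a feature image with one value per object pixel. The feature names and thresholds below are made up for illustration only.

```python
import numpy as np

# Two hypothetical base feature images (2 x 2 object pixels).
peak = np.array([[0.20, 0.95],
                 [1.00, 0.60]])   # e.g. maximum of each spectrum
index = np.array([[0.10, 0.70],
                  [0.80, 0.65]])  # e.g. a spectral index

# Algebraic operation: a new dependent feature from two base features.
mean_level = (peak + index) / 2.0

# Comparison operations: each yields a boolean image.
saturated = peak >= 0.99
high_index = index > 0.6

# Logical operation: combine the boolean images into one decision.
accepted = high_index & ~saturated

# Decision image with one integer value per object pixel.
decision = accepted.astype(np.uint8)
```

Here only the right-hand column is accepted: the top-left pixel fails the index threshold and the bottom-left pixel is flagged as saturated.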

Hyperspectral data processing – Functional Modules:

The following illustration gives an overview of the processing chain, abstracted into functional modules.

Instrument Abstraction

Depending on the acquisition technology, the instrument (camera) provides data in different formats. This functional module makes an instrument compatible by abstracting away its acquisition technology.

Instrument Standardization

Interferences caused by the acquisition technology are suppressed and the hyperspectral data are corrected. These disturbances depend on the chosen instrument hardware and may include:

- read-out noise

- dark current noise

- defect pixels

- wavelength calibration errors

- ...
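As one example of such a correction, defect pixels can be replaced by interpolating from intact neighbours. The sketch below assumes a known defect mask and interpolates linearly along the spectral axis of a single sensor frame; both the function name and the choice of interpolation axis are assumptions for illustration, and a real system might interpolate spatially or in both directions.

```python
import numpy as np

def interpolate_defects(frame, defect_mask):
    """Replace flagged detector elements by linear interpolation.

    frame       : (pixels, bands) sensor frame
    defect_mask : (pixels, bands) boolean array, True marks a defect pixel
    """
    frame = np.asarray(frame, dtype=np.float64)
    defect_mask = np.asarray(defect_mask, dtype=bool)
    fixed = frame.copy()
    bands = np.arange(frame.shape[1])
    for row in range(frame.shape[0]):
        bad = defect_mask[row]
        # Rows without defects, or without any intact pixel, are left as-is.
        if bad.any() and not bad.all():
            # np.interp holds the nearest intact value at the row ends.
            fixed[row, bad] = np.interp(bands[bad], bands[~bad], frame[row, ~bad])
    return fixed

frame = np.array([[1.0, 2.0, 99.0, 4.0, 5.0],
                  [10.0, 10.0, 10.0, 10.0, 0.0]])
mask = np.zeros_like(frame, dtype=bool)
mask[0, 2] = True  # hot pixel in the middle of the first row
mask[1, 4] = True  # dead pixel at the end of the second row
repaired = interpolate_defects(frame, mask)
```

The hot pixel is replaced by the average of its two spectral neighbours, and the dead pixel at the row end takes the value of its nearest intact neighbour.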

Disturbing Influence Suppression

Interferences caused by the measuring setup, such as non-uniform illumination, are suppressed, and the data are corrected with regard to application-relevant needs.

Hyperspectral Pre-processing

Typical (scientifically and industrially established) pre-processing methods such as filtering, derivatives and normalization are applied to the hyperspectral data.

Hyperspectral Feature Extraction and Operation

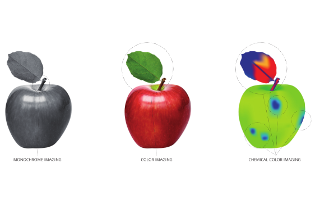

Hyperspectral features describe the information hidden in a spectral curve by a single value per pixel. This leads to a dimensional reduction, e.g. from a 3-dimensional hyperspectral cube to a 2-dimensional feature image.

Advantages:

- Describing information by feature values is more specific and easier to understand than inspecting a spectral curve.

- Feature operations often compute new (dependent) features as a function of base features. The result is often a decision or class information such as “spectra are oversaturated” or “a spectrum is similar to a reference material”.
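The reduction from a 3-dimensional cube to a 2-dimensional feature image, followed by a decision, can be sketched with a similarity feature. The example uses the spectral angle between each pixel spectrum and a reference spectrum, a widely used similarity measure; it is chosen here purely for illustration, and neither the measure nor the threshold of 0.1 rad is taken from the Perception Core.

```python
import numpy as np

def spectral_angle(cube, reference, eps=1e-12):
    """Angle in radians between each pixel spectrum and a reference spectrum.

    cube      : (lines, pixels, bands)
    reference : (bands,)
    returns   : (lines, pixels) feature image
    """
    cube = np.asarray(cube, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    dot = cube @ reference  # contracts the spectral axis
    norms = np.linalg.norm(cube, axis=-1) * np.linalg.norm(reference)
    # Clip to the valid arccos domain to absorb rounding errors.
    cos = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return np.arccos(cos)

reference = np.array([1.0, 2.0, 3.0])
cube = np.array([[[2.0, 4.0, 6.0],     # same shape as reference, brighter
                  [3.0, 2.0, 1.0]]])   # different spectral shape
angle = spectral_angle(cube, reference)  # 3-D cube -> 2-D feature image
similar = angle < 0.1                    # decision per object pixel
```

The first pixel is a scaled copy of the reference, so its angle is essentially zero and it is classified as similar; the second pixel has a clearly different spectral shape and is rejected.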

Output Interfacing

The information gained per object pixel is prepared to comply with standard machine vision formats.

Supported information formats are:

- Color information (3 values per object pixel)

- Multiple feature information (n values per object pixel)

- Decision information (1 value per object pixel)
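The three output shapes listed above can be pictured as arrays with 3, n or 1 values per object pixel. The sketch below is only an assumption about how such data might be laid out in memory; the 8-bit scaling, the helper `to_uint8` and the example features are hypothetical and do not describe the actual Perception Core interface.

```python
import numpy as np

def to_uint8(feature, lo, hi):
    """Linearly scale a feature image from [lo, hi] to 0..255, clipping outliers."""
    scaled = (np.asarray(feature, dtype=np.float64) - lo) / (hi - lo)
    return np.round(np.clip(scaled, 0.0, 1.0) * 255).astype(np.uint8)

# Three hypothetical feature images of 2 x 2 object pixels.
f1 = np.array([[0.0, 0.2], [1.0, 2.0]])
f2 = np.array([[0.2, 0.2], [0.2, 0.2]])
f3 = np.array([[1.0, 0.0], [0.0, 1.0]])

# Color information: 3 values per object pixel.
color = np.stack([to_uint8(f, 0.0, 1.0) for f in (f1, f2, f3)], axis=-1)

# Multiple feature information: n values per object pixel (here n = 3).
features = np.stack([f1, f2, f3], axis=-1)

# Decision information: 1 value per object pixel.
decision = (f1 > 0.5).astype(np.uint8)
```

Mapping three features onto color channels lets a conventional color machine vision pipeline consume the result without knowing anything about the underlying spectra.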